How Designers Set Up a Data Watching System to Inform Product Strategy

Let’s start out with a question: If you had to come up with enterprise-favorite buzzwords that transformed the decade, which phrases would you add to the list?

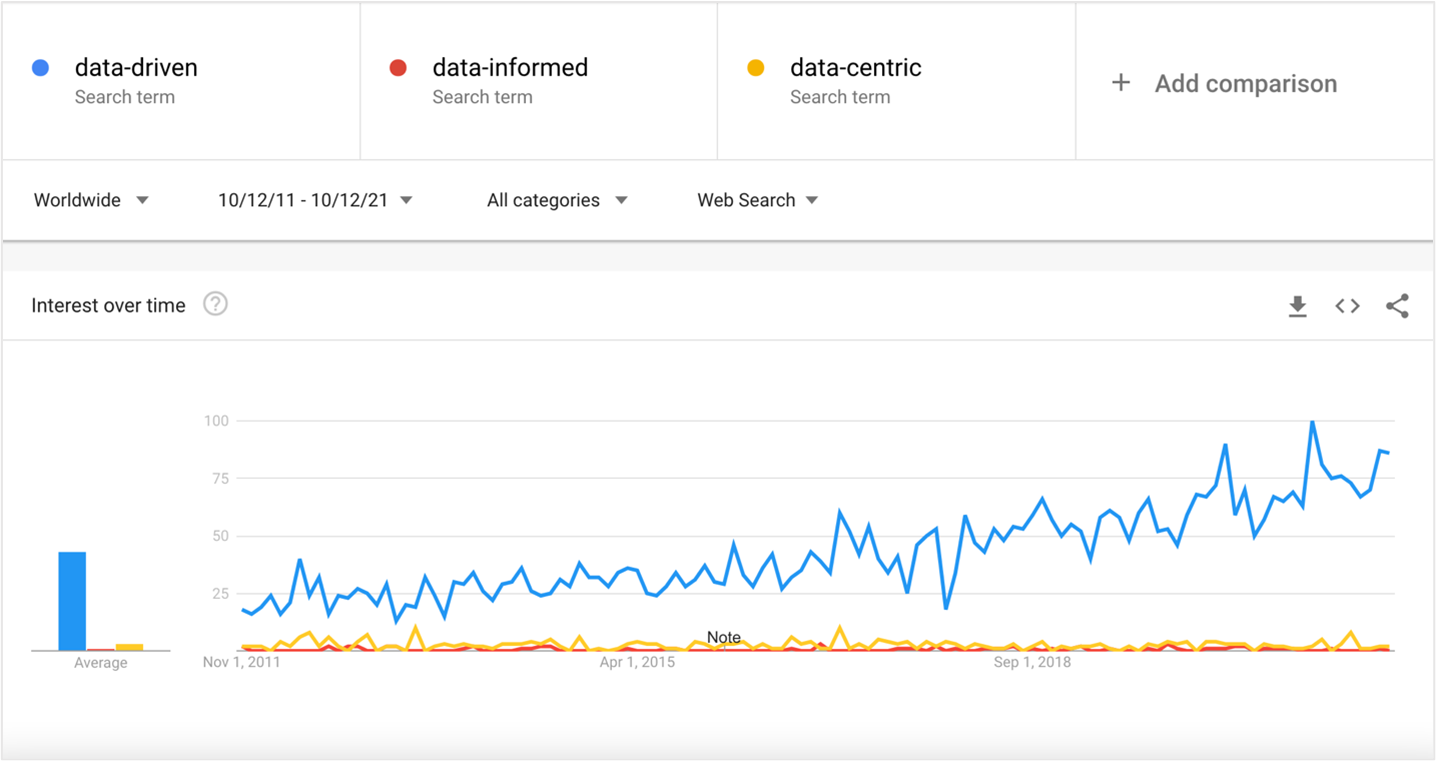

I’m thinking the word ‘data’ is popping up every few suggestions. ‘Data-driven’ and ‘data-informed’ are for sure at the top of my list (along with ‘agile’, of course, but that’s another story).

It’s hard to miss how much of a buzz there is around such a specific concept (even when compared to its alternatives) and how interest has grown over the past 10 years.

As experience design consultants at Commencis, we find that the notion of ‘becoming data-driven’ has an undeniable presence once we dive deep into our clients’ ecosystems. In this era, disrupting industries by utilizing data (something we all constantly generate in terrifying quantities) is a powerful concept; enterprises dare not fall behind other players in this regard.

In some cases, this can be considered a bandwagon situation. Most enterprises have a hard time finding a clear direction for making sense of data to inform the business. As the Commencis Design team, we recently tackled this issue, within our area of expertise, with a client in the financial services domain.

Our main objective was to set up new ways of working that would help our client track relevant data, in order to inform product and experience strategy.

This was an exclusive challenge for our Design team. So, I wanted to take a moment and share the account of how we approached establishing data watching activities at the center of our client’s experience team.

The As-is State: Capabilities and Gaps

Each business is unique, and solutions must be tailor-made for its needs. We approached this challenge the way we usually approach any business problem: with a design thinking mindset.

We started out by surveying the as-is state and the analytics capabilities surrounding our client’s leading mobile app. At this point, the key activity was to run in-depth sessions on various data platforms and databases. This allowed us to accumulate know-how about:

- Data points being collected

- Data points missing

- Periods of data collection

- Format of data collection

- Reporting and engagement capabilities of platforms

- Employee use cases and pain points in relation to data platforms

Defining Data Requirements and Opportunities

Mapping out the current state helped us group resources: There were 1-2 key data platforms for measuring user behavior and product performance. Key platforms provided a broader range of abilities, and they were more familiar to employees. The rest of the tools complemented these key platforms; they were utilized for very specific purposes, less frequently.

Altogether, we identified strong capabilities for keeping track of high-level journey performance (via funnels) and user demographics. Yet detailed interaction data (e.g., on-screen events) was lacking, as was the ability to run comparative data studies that would help feed product & experience strategy.

In tandem with our stakeholders, we envisioned a desired state, mapping key platforms and novel data requirements (both qualitative and quantitative) to our future data watching activities. We also made sure that the tools at hand covered user engagement capabilities (e.g., feedback, offer, and notification mechanisms).

A unique aspect of this project for us was working with Dataroid as the big data analytics and customer engagement platform. Using Dataroid gave our design squad a head start in constructing the envisioned state, since know-how transfer between our teams was rapid. Coming from the same ecosystem, we could communicate transparently about the product roadmap and collaborate instantly with data professionals. By feeding new requirements into Dataroid, we contributed to the evolution of the platform so that it could better serve our client’s data measurement needs.

It’s Alive: System Setup and Launch

Now this is the exciting part, where we began to execute what we envisioned.

Setting Up Standard Measurements and Activities: To launch this system fast, we wanted to start out with a compact set of data-watching activities. We initially decided on a few standard data categories to track. These categories consisted of the main indicators to be monitored periodically in order to quantify product experience and analyze our user base.

- Product Use and Behavioral Data (e.g., Funnels, Common User Paths, Conversion Rates, On-Screen Event Interactions, Error Rates and Types, No. of Active Users, User Acquisition Rates, Uninstall Rates)

- Transaction Data (e.g., Most Frequent Transactions, Transaction Volume)

- User Satisfaction (e.g., NPS Scores, Store Ratings & Reviews, Social Media Sentiment Data)

  - To obtain some of the satisfaction data, we set up user engagement tools and capabilities.

- User Demographics (e.g., Customer Segments and Affluence, Financial Product Ownership)

In addition to the must-get data categories above, we provided guidance for our client regarding how to identify new metrics to be measured for ad-hoc purposes.
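To make two of the standard metrics above more concrete, here is a minimal Python sketch of how step-to-step funnel conversion and an NPS score could be calculated. It is only an illustration: the function names, funnel step names, and sample numbers are hypothetical and are not taken from our client's setup or from Dataroid. The NPS formula used is the common one (share of 9-10 scores minus share of 0-6 scores).

```python
from __future__ import annotations

from typing import Sequence


def funnel_conversion(step_counts: Sequence[tuple[str, int]]) -> list[tuple[str, float]]:
    """Return the conversion rate into each step from the step before it.

    `step_counts` is an ordered list of (step_name, users_reaching_step).
    """
    rates = []
    for (_, prev_count), (name, count) in zip(step_counts, step_counts[1:]):
        # Guard against an empty previous step to avoid division by zero.
        rate = count / prev_count if prev_count else 0.0
        rates.append((name, rate))
    return rates


def nps(scores: Sequence[int]) -> float:
    """Net Promoter Score: % of promoters (9-10) minus % of detractors (0-6)."""
    if not scores:
        raise ValueError("at least one survey score is required")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


# Hypothetical money-transfer funnel and survey answers.
transfer_funnel = [("open_transfer", 1000), ("enter_amount", 800), ("confirm", 600)]
survey_scores = [10, 9, 8, 7, 6, 3, 10, 9, 2, 10]

print(funnel_conversion(transfer_funnel))
print(nps(survey_scores))
```

In practice an analytics platform would typically report these figures directly; the sketch only shows what the numbers mean so that Data Watchers can sanity-check them.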

Success Criteria and KPIs: Goal setting goes hand in hand with data monitoring. It is vital for measuring, documenting, and comparing product performance. For this, we created a template to help define success criteria in relation to the metrics mentioned above.
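As a rough illustration of what such a template could capture, the Python sketch below pairs a metric with a target and checks an observed value against it. The class name, field names, and example targets are assumptions made for this example; they do not reproduce the actual template we prepared.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    """One hypothetical row of a success-criteria template."""

    metric: str                    # e.g. "transfer funnel conversion"
    target: float                  # value the team commits to
    higher_is_better: bool = True  # False for metrics such as error rate

    def is_met(self, observed: float) -> bool:
        """Compare an observed value with the target in the right direction."""
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


# Hypothetical criteria and observations for one reporting period.
criteria = [
    SuccessCriterion("transfer funnel conversion", target=0.60),
    SuccessCriterion("error rate", target=0.02, higher_is_better=False),
]
observed = {"transfer funnel conversion": 0.64, "error rate": 0.03}

for criterion in criteria:
    status = "met" if criterion.is_met(observed[criterion.metric]) else "not met"
    print(f"{criterion.metric}: {status}")
```

Recording the direction of each metric alongside its target keeps period-over-period comparisons unambiguous.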

System Testing and Launch: Once the data watching framework was in place, we collaborated with our client’s analytics teams to ensure platform integrations and test environments were working as intended. It was critical to ensure that platforms could collect required data accurately. We tested the setup thoroughly, spending time and effort to fix any issues before kicking off data-watching activities.

Insight Generation & Taking Strategic Actions

With all the new practices in motion, our client’s experience team is now able to track, accumulate and compare product data in a more standardized manner.

In the new setup, analysis and reporting duties are delegated to team members with previous data analysis experience. Their duties include analyzing raw data, reporting insights, and tracking and comparing success criteria/KPIs. As they dive deep into data to make sense of patterns, they generate essential input for the team.

The work of these team members is critical for documenting periodic snapshots of the product and its users, which in turn inform strategic initiatives. With the help of data watching, the experience team can make strategic decisions and engage with users more confidently, backed by data insights. So far, by leveraging the data from the new system, we have informed decisions about information architecture and app navigation, to-be flows and designs for major financial transactions, and new research topics to explore.

Bonus: Advocating Multi-Disciplinary Teams

For a structure like this to work, promoting cross-functional teams is a foundational step. Teams that revolve around a digital product must internalize multi-disciplinary collaboration. Experience professionals are specialized in identifying and solving business/user problems. Data professionals, as Emily Glassberg Sands points out in her HBR article, complement the team skillset by “introducing feasible data-powered solutions”, analytics know-how and advanced data interpretations.

Going back to the subject of buzzwords and bandwagons: according to a 2021 study by NewVantage, the greatest challenge that enterprises face in becoming data-driven is people/business processes/cultural aspects (92.2%), rather than technology (7.8%).

To boost the adoption of our newly proposed ways of working, we wanted to contribute to the necessary organizational and cultural shift. Together with our client, we introduced:

- The Data Watcher role within experience teams: team members responsible for new data watching rituals, with data analysis and platform operation skills

- Educational sessions on operating data platforms and tools

- Weekly meetings between experience teams and:

  - Advanced analytics teams

  - Data platform/tool representatives

- Guides & documentation for Data Watchers to use (e.g., Data Platform Capabilities Chart, How To: Track Standard Metrics, How To: Form Success Criteria)

Next Steps and Closing Words

In the end, we put our focus on transforming the people and practices around digital products; this way product and experience strategy operations could become smarter, and data could be leveraged more efficiently in the process.

It takes time to embrace new ways of working, so rather than trying to create one perfect system, we found it safer to start small and build the rest from there. We put new practices in motion with our client, knowing that this would be an iterative cycle. We have yet to add other layers and data points to our system. Regardless, this is a solid starting point because it introduces standardization and consistency into processes. Data is actually being used to drive strategic decisions (rather than remaining just a buzzword).

Systems, roles, and frameworks are always open to improvement. As our collaboration continues, we keep working together to refine this system based on business needs and to enable our client’s employees.
