Dark Side of Data: Privacy

Do you know anyone who still uses Google Glass in daily life? In a few years, the question may well become who even remembers it. The general opinion treats the story of Google Glass as a business failure, and that is a reasonable inference as long as we do not make the mistake of ignoring the privacy aspects of that failure.

Privacy concerns about personal data are much higher today than they were 10 years ago, and they continue to grow. But are they strong enough to bring down major products of giant companies, despite their attractive features?

I strongly doubt that society’s awareness of privacy is at a satisfactory level. Technology moves fast, and its side effects show up years later. We all appreciate the value of AI and machine learning, but what about the privacy side of all this data? I call it the dark side of data, and the people whose work is based on data bear a particularly big responsibility in this matter.

Privacy in the Modern World

One of the earliest and most influential descriptions of privacy was presented by Warren and Brandeis in 1890. In their article, privacy is defined as “the right to be let alone”. The world was a different place in that era, so they pointed to cameras and newspapers when asserting this definition. It is still valid today, but it is definitely insufficient to express every aspect of the contemporary concept of privacy.

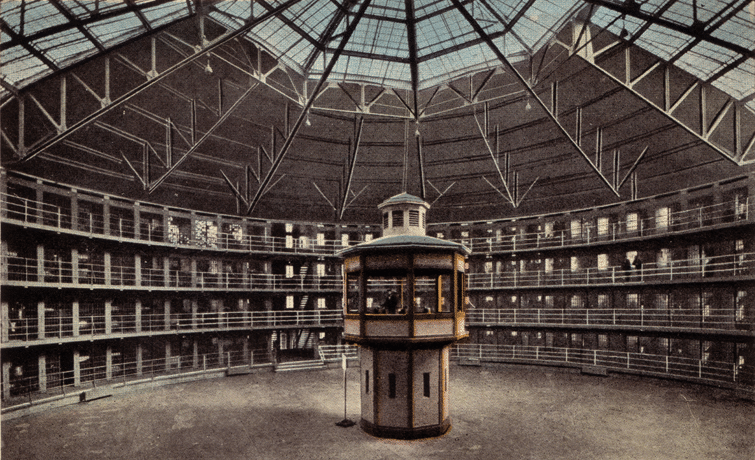

In the modern world, privacy is much harder to define. In fact, it is vaguer than ever. Alongside the benefits of technology, we face the side effects of recent developments up close. As Foucault explains in Discipline and Punish: The Birth of the Prison, being under surveillance can keep people from forming new ideas and make them question themselves. It sets off self-censorship across society, which creates a suitable environment for oppressive regimes to rule that society easily.

Should we then stop using technology in order to protect ourselves? It is certainly not that simple in such a technology-dependent world. Let’s consider some examples. Think about how you would respond to each of the following devices in terms of privacy violation:

- CCTV cameras around your home. What if they prevent a terrorist attack?

- A location tracker in your mobile device. What if it saves your life in a natural disaster?

- A heart rate monitor in your watch. What if it warns you before a heart attack?

- A microphone listening in your television. What if it recommends a product that you are searching for?

Yes, I know the last one does not look so indispensable, but everything has pros and cons whose weight differs from person to person, and making a conscious decision about your own data requires a certain level of awareness. Despite the numerous advantages of technology in our daily lives, the question still stands: who will protect our sensitive personal data from self-serving tech companies, overreaching governments, or opportunistic hackers?

Keeping your privacy is hard because if you slip once, it’s out there forever.

— Aaron Swartz

It is clear that recent cases such as Cambridge Analytica have helped draw society’s attention to the ethical side of data. Matt Turck describes this in his 2019 Data & AI Landscape report:

Perhaps more than ever, privacy issues jumped to the forefront of public debate in 2019 and are now front, left and center…. Our relationship to privacy continues to be a complicated one, full of mixed signals. People say they care about privacy, but continue to purchase all sorts of connected devices that have uncertain privacy protection. They say they are outraged by Facebook’s privacy breaches, yet Facebook continues to add users and beat estimates (both in Q4 2018 and Q1 2019).

Quis Custodiet Ipsos Custodes?

The fancy title above comes from the Roman poet Juvenal and can be translated as “Who watches the watchmen?”. I use this quote to emphasize the interdependency between privacy authorities such as governments and public institutions.

At this point, all kinds of data-related activities depend on legal regulations such as GDPR in one way or another. Such legislation is certainly a big step for privacy protection efforts, but it is not a complete solution.

On the other hand, organizations like Privacy International play a critical role in organizing people and building a common ethics of data. Moreover, thanks to the growing popularity of data-related fields, other institutions are publishing their own ethical codes to bring clarity to this Kafkaesque environment.

However, these institutions and grassroots movements working on privacy rights are not sufficient on their own. In my opinion, their efforts require practical and moral support from the people at the center of the data industry. Data practitioners of all kinds are responsible for their work in this field.

DJ Patil explains the ethical rules of data science with the 5 C’s:

- Consent

- Clarity

- Consistency

- Control & Transparency

- Consequences & Harm

No matter how deeply you are involved in data, paying attention to such data science ethics is the key to establishing data privacy and making your work respectful of universal human rights.
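For practitioners, principles such as consent and control can show up directly in code. The following is only a minimal sketch of that idea and is not taken from Patil’s work: the record fields (`user_id`, `consented`, `heart_rate`), the `SECRET_KEY` value, and the helpers `pseudonymize` and `prepare_for_analysis` are all hypothetical. Note also that a keyed hash is pseudonymization, not full anonymization, since whoever holds the key can still link records to a person.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would live outside the code base.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(user_id, key=SECRET_KEY):
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_analysis(records):
    """Keep only records whose owner gave consent and drop the raw identifier."""
    prepared = []
    for record in records:
        # Consent: records without explicit consent are never processed.
        if not record.get("consented", False):
            continue
        prepared.append({
            "user_key": pseudonymize(record["user_id"]),
            "heart_rate": record["heart_rate"],
        })
    return prepared


# Illustrative, made-up records.
records = [
    {"user_id": "alice", "consented": True, "heart_rate": 72},
    {"user_id": "bob", "consented": False, "heart_rate": 80},
]
result = prepare_for_analysis(records)
```

Here only the consenting user’s record reaches the analysis step, and it carries a pseudonymous key instead of the raw identifier. Treating missing consent as a refusal is a deliberate default in this sketch.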

The Ethics Guidelines for Trustworthy AI report by the European Union emphasizes the value of human rights and their relationship with AI:

Equal respect for the moral worth and dignity of all human beings must be ensured. This goes beyond non-discrimination, which tolerates the drawing of distinctions between dissimilar situations based on objective justifications.

Additionally, the privacy side of AI is mentioned in the same report:

AI systems must guarantee privacy and data protection throughout a system’s entire lifecycle. …Digital records of human behaviour may allow AI systems to infer not only individuals’ preferences, but also their sexual orientation, age, gender, religious or political views. To allow individuals to trust the data gathering process, it must be ensured that data collected about them will not be used to unlawfully or unfairly discriminate against them.

The Oldest and Strongest Emotion of Mankind

In this article, I did not set out to discuss the harms of privacy violations. Instead, I aimed to point to some ethical responsibilities of conscientious people. I know it is very hard to determine whether an action is ethical, because it changes from case to case. Still, ethical guidelines provide a roadmap for us, and it is our obligation to make our work respectful of human rights and data privacy. I believe that this obligation takes precedence over money, so if someone asks you to break your principles, you must be able to refuse.

“The oldest and strongest emotion of mankind is fear, and the oldest and strongest kind of fear is fear of the unknown”

— H.P. Lovecraft

Reading Time: 8 minutes

Emre Rençberoğlu / Data Scientist at Commencis

17/01/2020
